Mac OSX has a great built-in feature called Time Machine, which is designed to provide simple backup & restore functionality for your system. Time Machine does more than just keep a most-recent backup handy; it keeps track of changes to your files on a regular basis, and allows you to go back in time to a prior state of your filesystem to recover files that were lost — even those that were deleted intentionally.

Time Machine can write backups to an external drive (USB, FireWire, or Thunderbolt), or to a network device. Apple sells a network device called Time Capsule that works with Time Machine, but other vendors also provide NAS devices that integrate directly with the Time Machine software on the Mac client.

I’ve been Mac-only at home for six years, and have used Time Machine ever since it first appeared in Leopard, in 2007. Last year, I upgraded my backup strategy to point all machines to a Synology DS411j NAS. This has been an awesome little device, and I use it for more than just backup/restore.

Backups are pretty darn important

Your data is really important to you. Trust me. Maybe it’s your family photos or home movies. Or tax returns. Or homework. Whatever it is, it’s critical you keep at least two copies of it, in the event of accidental deletion, disk failure, theft, fire, or the zombie apocalypse.

Scott Hanselman has a nice writeup on implementing a workable backup and recovery strategy. I’ve done that, and you should do the same. If you use a Mac, Time Machine should be part of your strategy, and it’s a heck of a lot better than no backup strategy at all. But be very wary of Time Machine, because it ain’t all roses.

Time Machine, In Which There Are Dragons

Time Machine is quite clever, and uses UNIX hard links to efficiently manage disk space on the backup volume. Backups are stored in a sparse bundle file, which is a form of magic disk image that houses all backup data.
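To make the hard-link idea concrete, here is a minimal shell sketch. It is not how Time Machine is implemented internally; it only shows the underlying filesystem trick: two names pointing at the same data, so an unchanged file costs no extra space in a second snapshot. The temporary directory and the file names are made up for illustration.

```shell
#!/bin/sh
# Hypothetical demo: two directory entries that share one copy of the data.
demo_dir=$(mktemp -d)

echo "family photo bytes" > "$demo_dir/snapshot1.jpg"

# A hard link adds a second name for the same inode; no data is copied.
ln "$demo_dir/snapshot1.jpg" "$demo_dir/snapshot2.jpg"

# -ef is true when both paths refer to the same file (same device and inode).
if [ "$demo_dir/snapshot1.jpg" -ef "$demo_dir/snapshot2.jpg" ]; then
    hardlink_result="same inode"
else
    hardlink_result="different inodes"
fi
echo "$hardlink_result"

# Removing one name leaves the data reachable through the other.
rm "$demo_dir/snapshot1.jpg"
cat "$demo_dir/snapshot2.jpg"
```

The data only disappears once the last name pointing at it is removed, which is why old snapshots can be deleted without damaging newer ones.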

But, I swear, it is sometimes just too magic for its own good. It is decidedly not simple under the hood, and when it fails, it fails in epic fashion. See this for some examples.

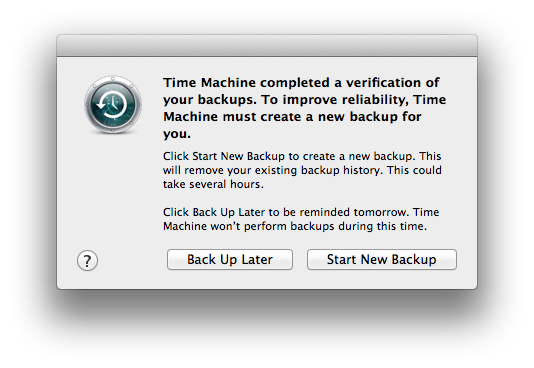

I’ve had at least five backup failures that I blame on Time Machine in the past four years. In OSX Lion, one kind of failure shows up like this:

There is no recovery option provided to you. If you say “Start new backup”, it deletes your old one and begins anew. If that’s terabytes of photos/video/music/whatever, be prepared for a very long wait. Perhaps days, depending on your backup data set size, network speed, disk speed, and phase of the moon. Okay, maybe not the last part, but you never know.

And in the meantime, your backup system is gone. At this point, it is obvious that having data in at least three places is necessary. (Note that there are techniques for repairing the Time Machine backup volume. Dig out the solder and oscilloscope first, though).

You didn’t do anything, but Time Machine broke. That is completely unacceptable for a backup system.

It’s quite possible that I’m doing it all wrong. And the problems may not be Apple software errors; they may be a function of Apple+Synology, or just Synology. But that is beside the point. Any backup strategy that can fail and irrecoverably take all your data to Valhalla is a horrible strategy. I need something that cannot fail.

A better strategy, with 73% less insanity

As it turns out, I have never really cared about the save-old-versions-of-files feature of Time Machine. I have used it to recover entire volumes — twice, both during machine upgrades. Recovering an inadvertently deleted file is rather rare for me, but I suppose I do care about that feature a little bit.

Time Machine sparsebundle files are opaque to the average user. You cannot open them up, peek inside, and grab the files you need. You need the Time Machine client, and when it encounters an error with the backup file, it offers no choice but to abort and start over.

This is why I use rsync. With a little bit of Time Machine still involved for added spice. You know, just to keep things interesting.

Plus, rsync sounds cooler.

Rsync is a command-line tool, available for several platforms, and included with Mac OSX. In its simplest form, it just copies files from one place to another. But it can also remove files no longer needed, exclude things you don’t care about, and work across a network, targeting a mounted volume or a remote server that supports SSH. Which is how I use it.

The end result of an rsync backup is a mirror of your source data, readable by anything that can read the format of the target filesystem. This part is critical. Backups are irrelevant if nothing can recover them. A bunch of files in a directory on a disk is accessible by just about everything. Time Machine sparsebundles require Time Machine, on a Mac. Files in a directory can be read by any app or OS. This increases your odds of recovery by, well, a lot.

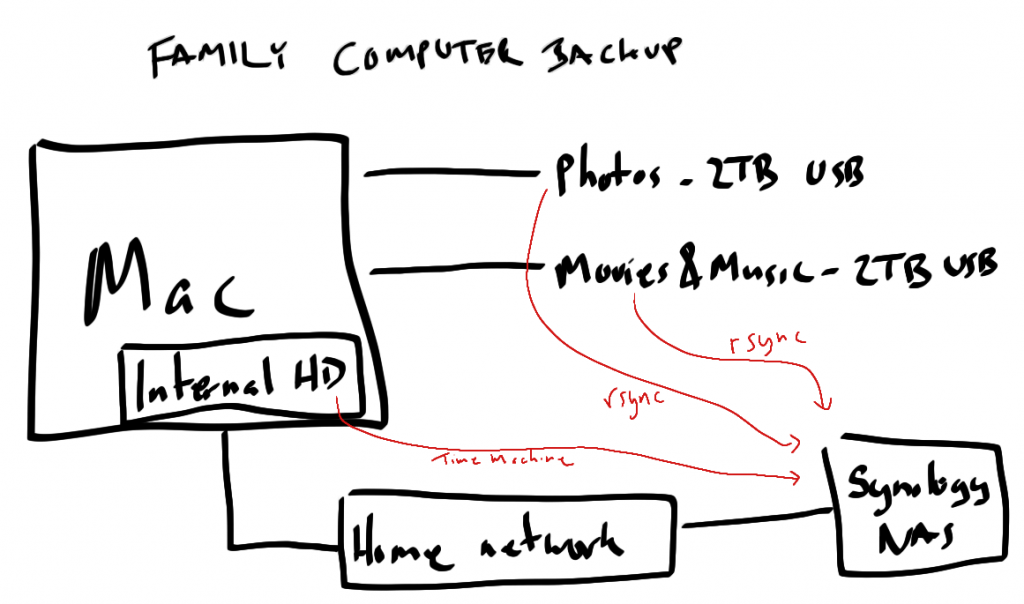

I have a few computers around the house. Our primary family computer is an iMac, and has one internal disk and two USB external disks. The internal disk has all the user folders, documents, applications, and OS files. The external drives contain photos, movies, music, etc.

My backup strategy has the internal disk backed up to my NAS using Time Machine, and the external disks backed up to the same NAS using rsync.

Some notes:

- The Internal HD volume is less than 100GB, and the backup executes automatically every hour.

- I wrote simple shell scripts to automate the rsync commands. I execute the rsync scripts manually, but these are easy to automate.

I won’t go into too much detail on rsync usage (some resources that might help: 1, 2, 3), but here’s how I back up an entire external volume using rsync:

rsync -av --delete --exclude ".DS_Store" --exclude ".fseventsd" --exclude ".Spotlight-V100" --exclude ".TemporaryItems" --exclude ".Trashes" /Volumes/your-local-volume-name-that-you-want-backed-up/ user-name@backup-server:/volume-name-on-server/path-on-backup-server

The -av says “archive, with verbose output”. The --delete option says “get rid of anything on the server that’s no longer on my local machine” (be careful with this one). The --exclude options allow me to avoid backing up crap I don’t need. The username stuff allows me to log in to the server and perform the backup using that identity on the server.
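Since --delete is the dangerous part, a wrapper that previews by default can help. The following is only a sketch under a few assumptions: the function name, the RSYNC_CMD and DRY_RUN variables, and the example paths are all made up here. By default it just prints the command it would run; setting RSYNC_CMD=rsync runs it for real, and DRY_RUN=1 adds rsync's --dry-run flag so that nothing is copied or deleted.

```shell
#!/bin/sh
# Sketch of a wrapper around the rsync command above.
# RSYNC_CMD and DRY_RUN are hypothetical knobs invented for this example.
RSYNC_CMD=${RSYNC_CMD:-echo rsync}   # prints the command unless overridden

backup_volume() {
    src=$1
    dest=$2
    # Rebuild the argument list so paths containing spaces stay intact.
    set -- -av --delete
    # macOS housekeeping entries that are not worth backing up.
    for name in .DS_Store .fseventsd .Spotlight-V100 .TemporaryItems .Trashes; do
        set -- "$@" --exclude "$name"
    done
    # --dry-run makes rsync report what it would do without changing anything.
    if [ "${DRY_RUN:-1}" = "1" ]; then
        set -- "$@" --dry-run
    fi
    $RSYNC_CMD "$@" "$src" "$dest"
}

backup_volume "/Volumes/your-local-volume/" "user-name@backup-server:/volume-name-on-server/path"
```

Once the preview looks right, running with RSYNC_CMD=rsync and DRY_RUN=0 performs the actual backup.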

Summary

Rsync can fail with network or disk hardware errors. Time Machine can fail with network, disk hardware, or buggy software errors. I prefer rsync for the really important stuff, and use Time Machine for the OS disk, which is relatively small and something I can recover from quickly after the inevitable Time Machine error.

Superb advice. One caveat, though. Snow Leopard ships with /usr/bin/rsync, which is a buggy 2.6.9 version. I downloaded and built rsync version 3.0.9 and it works flawlessly and with no spurious permissions errors. Of course, 2.6.9 recognizes the -E option to save extended attributes, while 3.0.9 uses the -A and -X options to do the same. (I wonder if Time Machine is using the buggy old rsync and that’s why it sometimes fails for no apparent reason????)

Thanks Brian, that’s a great suggestion. I’ll have to give the new rsync a try.

Hello, I am new to rsync and don’t know if I should use the option -E or not for extended attributes. Could you please give me some advice? I am backing up from Snow Leopard with rsync 2.6.9 on a Synology NAS.

At the moment I just use rsync -av and I get quite a few permission errors; it seems to back up just fine, though. I’ll be installing 3.0.9 soon.

I’m not terribly clear myself on the appropriate usage of -E (or -A -X depending on version; see note above from the other Brian). However, permission errors may be able to be addressed by using sudo. Try sudo rsync -av. You’ll need to supply your password.

The problem is that rsync is really really slow at scanning your entire file system and will cause massive I/O for that period. This is bad on a number of levels. It will also correspondingly bang the crap out of your network.

Ultimately the biggest failure point I’ve seen is the hard-drives themselves. Rsync or time machine doesn’t seem to make that much difference, most backup drives are crap drives, and if you’ve got terabytes of data, then backing up over the network is a pretty silly idea. The best kind of backup is one that’s on multiple different devices and on reasonable quality drives.

@Alex: You would only have that I/O problem if you do a full back-up on every pass/run of rsync, versus an incremental back-up, where you’d only have that I/O on the initial sync.

There’s an option for rsync, “--bwlimit=1000”, that will limit your disk and network I/O; the value is given in kilobytes per second. rsync is designed to be very fast and efficient over the network with incremental back-ups. Using this option should keep your back-up process (rsync) from slowing down your machine.

Have a good one.
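To put a number on the --bwlimit suggestion above, here is a small sketch that converts a bandwidth budget in megabits per second into an approximate --bwlimit value. The helper name and the example paths are made up, and the conversion is rounded (some rsync versions count the unit as 1024 bytes), so treat the result as a ballpark.

```shell
#!/bin/sh
# Sketch: convert megabits per second to an approximate --bwlimit value
# in kilobytes per second (1 byte = 8 bits). Helper name is hypothetical.
mbit_to_bwlimit() {
    echo $(( $1 * 1000 / 8 ))
}

limit=$(mbit_to_bwlimit 8)

# Print the command rather than run it; the paths are placeholders.
echo "rsync -av --bwlimit=$limit /Volumes/Photos/ user-name@backup-server:/backup/Photos"
```

With an 8 Mbit/s budget this works out to the --bwlimit=1000 used in the comment above.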

Time rsYnc Machine (tym)

http://dragoman.org/tym

This might be useful.

Regards

C.

Thanks for the tip,

I’d just received the third “improve-reliability/oops-lost-your-backup” message in 12 months, I’m done with Time Machine.

Thanks for the great post! I had similar problems with Time Machine and I am also a big fan of rsync. My backup solution is now mainly based on rsync (though I am still running Time Machine). I do daily scheduled backups of my Macs on my NAS and the NAS is synced weekly over the internet to an offsite NAS.

I wrote a script to facilitate everything and called it Space Machine ;-).

I have two posts on my blog about my backup solution. You can also get my script there:

http://www.relevantcircuits.org/2013/03/space-machine-made-public.html

http://www.relevantcircuits.org/2013/02/a-space-machine-to-safe-immaterial.html

Hope this is interesting for people here!

Hi Brian, Many thanks for the information you provided. I have been working on an rsync problem where I would get an error message when copying info out of a disk image / sparse image file. In your example you excluded a bunch of files, so I copied what you did and my rsync is now working. THANK YOU!!!!!! I can now get on with my life 🙂 Much appreciated. Terry

Hi Brian,

This second post is to give a bit more detail in order to help others that have had same problem that I did.

The error message I got was:

rsync error: some files could not be transferred (code 23) at /SourceCache/rsync/rsync-42/rsync/main.c(992) [sender=2.6.9]

I have a private folder that my wife and I share and I wanted to put it into an encrypted sparse image file. That rsync completed fine. I then wanted to be able to use rsync to synchronize the private folder on my hard drive with another encrypted sparse image file on a network drive. This second copy always failed regardless of destination folder type.

When I saw that you excluded certain files – I tried it and it worked fine for me.

Again many thanks for your post.

Terry

I am personally using rsync in preference to Time Machine for backing up to a non-HFS+ drive (a NAS box). I do have to say, though, that I’ve tried it both ways and Time Machine is *much* faster. For a large backup (~1,000,000 files at ~3 TB), Time Machine can calculate changes and begin backing up very quickly, regardless of what has actually changed, while rsync will only start quickly if you’re lucky and the changed files are some of the first ones processed. Its transfer speeds are also much slower (five or six times slower!).

In the end I still went with rsync to eliminate the sparse bundle on my NAS, plus it means that if I setup my NAS to backup online then I’ll actually be backing up a useful file-structure, rather than a disk image that needs to be downloaded in its entirety no matter how many files I want to restore. But this is in addition to a Time Machine backup on an external drive directly connected to my machine, as it gives me the change history and pretty GUI to enjoy.

No sooner than I posted my above comment than I found a possible work-around; one of the reasons that Time Machine can sync so quickly is because it uses Spotlight databases to quickly determine what’s changed.

Well we can actually do the same thing with rsync like so:

mdfind -interpret "modified:>=18/4/13" -onlyin /foo/bar > /tmp/rsync_files

rsync -a --files-from=/tmp/rsync_files / /target/folder

It requires some extra processing to ensure that all the files returned are valid (Spotlight sometimes returns weird extra results), but it reduces the initial checking time to a fraction of what it currently is. Simply save a timestamp somewhere to feed into the interpret parameter and you can enjoy much smoother backups. It doesn’t help with the relatively poor transfer speed, but it lets a huge comparison execute in seconds instead of hours.
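The "extra processing" step could look something like the sketch below, which keeps only the paths that still exist as regular files. The function name is made up, and the mdfind line is shown as a comment because mdfind only exists on a Mac; the small demo at the end runs anywhere.

```shell
#!/bin/sh
# Sketch only: Spotlight results can include stale or odd entries, so keep
# just the paths that still exist as regular files before handing the list
# to rsync. The function name is invented for this example.
filter_existing_files() {
    while IFS= read -r path; do
        if [ -f "$path" ]; then
            printf '%s\n' "$path"
        fi
    done
    return 0
}

# Self-contained demo: one real file, one path that does not exist.
real_file=$(mktemp)
filtered=$(printf '%s\n' "$real_file" "/no/such/file" | filter_existing_files)
echo "$filtered"

# On a Mac, the same filter would sit between mdfind and rsync, e.g.:
#   mdfind -interpret "modified:>=18/4/13" -onlyin /foo/bar \
#       | filter_existing_files > /tmp/rsync_files
```

Because mdfind prints absolute paths, the resulting list is meant to be used with a source directory of / when passed to rsync's --files-from option.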

Been using rsync for years. My parameters list is ‘vauxhizP’

$ rsync -vauxhizP --delete source destination

-a is archive mode

-u is update mode. It skips any file that is newer on the destination than on the source. (Files that have not changed are skipped by rsync’s default checks anyway.) Useful to save time!!

-v verbosity

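-x (--one-file-system) stops rsync from crossing filesystem boundaries, so other volumes mounted under the source are not pulled into the backup. I use it when backing up to an external drive with a different filesystem (useful for networks too)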

-h human readable output, together with

-i itemize changes, together with

-P (--partial --progress). These three allow me to watch the backup as it occurs, and -P also keeps partially transferred files. If I do a batch backup (one that I will not be watching) I disable these three or I redirect stdout to a log file ($ rsync -vauxhizP --delete source destination > log.txt)

-z compress files during transfer. Saves sending time for networking backups. I disable it on local backups.

--delete was explained above. It deletes files that no longer exist on the source side but are still present in the destination.

Another useful option is -b for making incremental backups (you can also set it to a different DIR, and also combined with “date command” you can set automated backups to folders arranged by date).

I hope my kit of useful parameters helps someone 😉
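The -b idea mentioned above, combined with a dated directory, might be sketched like this. The helper name and all paths are placeholders, and the script only prints the rsync command so it is safe to try. With -b and --backup-dir, files that rsync would overwrite or delete on the destination are moved into the dated directory instead.

```shell
#!/bin/sh
# Sketch: build a dated directory name for rsync's --backup-dir so that
# files replaced or deleted by today's run are moved aside, not lost.
# The helper name and the paths are hypothetical.
dated_backup_dir() {
    printf '%s/%s\n' "$1" "$(date '+%Y-%m-%d')"
}

archive=$(dated_backup_dir "/Volumes/Backup/archive")

# Print the command instead of running it.
echo "rsync -a --delete -b --backup-dir=$archive /Volumes/Photos/ /Volumes/Backup/Photos/"
```

Each day's run then gets its own archive folder, which gives a simple history that any file browser can read.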

I updated my rsync backup script “Space Machine” (see above) today. It now provides the option to keep an archive of modified or deleted files, thus providing a backup history.

The archive may be optionally compressed after each synchronization/backup run and archived files are automatically deleted after a set period of time.

You may download the updated script here:

http://www.relevantcircuits.org/2014/01/space-machine-extended-keep-archive.html

Hi all,

I am a Time Capsule user and I faced a problem which made me realise that Time Machine is useful until the day you really need it. So, roaming around the web for alternative solutions, I found rsync an interesting one, but it still doesn’t seem to resolve “my” problem, which I believe is quite common.

In short, Time Machine gives no warning when it fails to copy an unreadable file, e.g. because it sits on a faulty sector. So you don’t realise the file is unreadable until you try to open it. This means that if you don’t open the file for months, or even years, you just don’t know your file is definitively gone. When you go to look for it in the Time Machine backup, you will notice that the file is not there. So the file is lost, with no notice.

Of course, in some cases the file may have been backed up while it was still readable, so you can find a copy of it in the older backups. But because Time Machine deletes the oldest backups when the volume is full, in many cases you have lost a file that you were sure to have in your backup.

I believe this is even worse than all the other errors, which in one way or another we are aware of because they are reported. Here you lose files without ever knowing it.

And what about rsync? Does it report unreadable files?
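On the rsync side of that question: rsync prints an error for files it cannot read and exits with a non-zero status, such as the code 23 quoted in an earlier comment. A backup script can check that status instead of relying on someone reading the output. The sketch below is one way to do it; the function name and the wording of the messages are made up here, and only a few exit codes are handled.

```shell
#!/bin/sh
# Sketch: turn rsync's exit status into a message a backup script can log.
# The function name and message texts are invented for this example.
describe_rsync_exit() {
    case "$1" in
        0)  echo "ok: all files transferred" ;;
        23) echo "warning: some files could not be transferred" ;;
        24) echo "warning: some source files vanished during the run" ;;
        *)  echo "error: rsync exited with code $1" ;;
    esac
}

# Hypothetical usage after a real run:
#   rsync -av /Volumes/Photos/ /Volumes/Backup/Photos/
#   describe_rsync_exit $?
describe_rsync_exit 23
```

Logging or mailing that message after every run makes a skipped file visible on the day it happens rather than months later.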

I’m planning to back up to a USB drive plugged into my router using rsync and the Spotlight database trick posted earlier. For file attributes, permissions, etc., should I format the drive using a Mac OS format, or is something like FAT OK (assuming I have no really big files)?

Hi,

Thank you for the helpful article; however, I have some confusion. Maybe someone can help me out. I have dual drives (SSD + HD in CD-ROM drive):

– Primary SSD: “Macintosh HD”

– Secondary: “Dual HD”

I am using the following command to backup both drives (rsync version 3.1.1 | OS X Mountain Lion)

./rsync -av --delete --exclude ".DS_Store" --exclude ".fseventsd" --exclude ".Spotlight-V100" --exclude ".TemporaryItems" --exclude ".Trashes" /Volumes/Dual\ HD/ /Volumes/Backup/Dual\ HD/

./rsync -av --delete --exclude ".DS_Store" --exclude ".fseventsd" --exclude ".Spotlight-V100" --exclude ".TemporaryItems" --exclude ".Trashes" /Volumes/Macintosh\ HD/ /Volumes/Backup/Macintosh\ HD/

The confusion is with Macintosh HD backup. /Volumes/Macintosh\ HD/ has more /Volumes/Macintosh\ HD and that keeps going on.

My-MacBook-Pro:Macintosh HD user$ pwd

/Volumes/Macintosh HD/Volumes/Macintosh HD <<<--- And I can again do "cd Volumes" and again "cd Volumes"

So when rsync backs it up I have multiple copies of "Users" and all folders within it. This causes my external drive (1TB) to fill up, whereas my total usage (both disks) is ~450GB.

Also looks like rsync is not excluding what is excluded in the command line. I hope I explained it clearly. Thank you in advance for any help.

I’ve been using rsync backup to Synology NAS (DS411j in my case) for years, it’s great.

BUT, you are doing it the slowest way imaginable. Just open the Synology resource monitor while you run rsync the way you describe in the article above, and you’ll see the CPU is stuck at 100% all the time, and the transfer is slloowww. To make it faster, mount the drive first via SMB and then rsync to the local mount point on the Mac. That is about 5x faster for free 🙂

Here is my script to do that.

---

curl http://192.168.1.5

mkdir /Volumes/ds411j-pics

mount_smbfs //user:pass@192.168.1.5/synosharename /Volumes/ds411j-pics/

rsync -va --exclude="iPod Photo Cache" /Volumes/1TB\ WD10JPVT/TCP/ /Volumes/ds411j-pics/TCP/

umount /Volumes/ds411j-pics

---

The first curl command wakes the unit from sleep in time for the mount command to succeed (i.e., it does nothing else).

Hope this helps.

Thanks Tim, this is great. Since I first posted this, I’ve had my set of data to back up grow to be quite large, and it has certainly become slow. (I run it nightly, so not a big deal, but still). I’ll try your SMB recommendation.

2018, still relevant. Time Masheen is still the same disaster it always was. I strongly suggest you throw pax in the mix if you want true incremental backups.

So basically, you run pax *first*:

pax -rwl "$LOCATION/hourly/hourly.0" "$LOCATION/hourly/hourly.1"

And then you run rsync:

$RSYNC -v $RSARGS --delete --numeric-ids --relative --delete-excluded --exclude-from="excludes.txt" / $LOCATION/hourly/hourly.0/

And now you can recover older versions of your files.
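To keep more than one older snapshot with the hourly.N layout above, the existing snapshots need to shift up one slot before the pax step. The sketch below is one possible way to do that and is not part of the commenter's setup: the function name and the retention count are assumptions. It leaves hourly.0 in place and frees hourly.1 for the hard-linked copy.

```shell
#!/bin/sh
# Sketch: shift hourly.1 .. hourly.(keep-1) up by one slot and drop the
# oldest snapshot. Function name and retention count are hypothetical.
rotate_snapshots() {
    base=$1
    keep=$2
    # Refuse to run with an empty base path.
    [ -n "$base" ] || return 1
    # Drop the oldest snapshot if present.
    rm -rf "$base/hourly.$keep"
    i=$keep
    while [ "$i" -gt 1 ]; do
        prev=$((i - 1))
        if [ -d "$base/hourly.$prev" ]; then
            mv "$base/hourly.$prev" "$base/hourly.$i"
        fi
        i=$prev
    done
}

# Self-contained demo in a temporary directory.
snap_root=$(mktemp -d)
mkdir "$snap_root/hourly.0" "$snap_root/hourly.1"
touch "$snap_root/hourly.1/marker"
rotate_snapshots "$snap_root" 3
ls "$snap_root"
```

After the rotation, the pax and rsync commands from the comment above would run as before.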

#!/bin/sh

# DESTINATION DIRECTORY GOING FROM VOLUME/SOURCE TO VOLUME/GIGABYTE/SOURCE/DESTINATION

# DESTINATION GET SAME PATH AS SOURCE

if [ $# -ne 2 ]; then

echo 1>&2 Error, Usage: $0 sourcePath destDir

exit 127

fi

goingTo="Gigabyte"

theDate=`date '+%y%m%d%H%M%S'`

destPath="/Volumes/$goingTo/$1"

theIncr="$destPath/$2-$theDate"

# scriptOut="$destPath/progress.txt"

scriptOut="/Volumes/$goingTo/progress.txt"

echo "~/bin/mybook"

echo "------------------------------------------------------------" > "$scriptOut"

echo; echo; echo "/Volumes/$1$2 -> $theDate" >> "$scriptOut"

rsync -ablPvz --backup-dir="$theIncr" --delete --progress "/Volumes/$1$2/" "$destPath/$2" >> "$scriptOut"

echo "------------------------------------------------------------" >> "$scriptOut"

cat "$scriptOut"

exit 0